The nature of candidate and job discovery will change, improving what companies look for and how efficiently they can find it. The result will be better matches for both candidates and companies.

This is the third article in a three-part series on AI’s impact on the hiring process. The first article focused on how the resume itself will evolve given the incredible text-processing capabilities of LLMs. In the second article, we saw how the same technology will change the dynamics of interviewing. The series concludes by examining how these two changes, along with other newly unlocked capabilities, will alter the job search and hiring process. While there is potential for bias, if done right, AI can make the entire job market much more efficient, benefiting candidates and companies alike.

This series began by looking at how the resume will evolve to include much more information in “The Future of the Resume, AI Interviews, and the Evolution of Hiring – Part 1: Less is More is Now More is More”. This richer data will allow for better searching and let companies look beyond what’s under the streetlamp, a perennial problem in hiring (see “The Streetlamp Effect in Hiring”), so they can hire for the skills they really want. In Part 2, “The Future of the Resume, AI Interviews, and the Evolution of Hiring – Part 2: Agentic Interviewing,” we discussed how the rise of AI-driven interviews, while frustrating at first because of the initial time required and likely asymmetry, will also lead to longer-term efficiency and better matching by both sides. The question, then, is what this means for the future of recruiting and the hiring process as a whole. Can we just upload everything and have an AI overlord match us, à la the Black Mirror episode “Hang the DJ” (in which, spoiler alert, simulations are used to determine dating compatibility)? Do we even need traditional job boards and recruiters, or can we just wait for a job offer to appear in our inbox like a magical letter from Hogwarts?

I started the first article with a memory of watching my father type out a paper resume and drop it in the mail. In theory, job boards should have eliminated this practice, since jobs and resumes could easily be matched in online systems and only a narrow set of qualified candidates would be invited to apply. In reality, this didn’t happen, because job boards can match on little more than keywords, and a true match, even just for interest in a job (let alone a hiring match), depends on much more. Today’s job boards are little more than an online extension of the newspaper classifieds and offer little in the way of filtering.

Job boards will soon have much deeper information, drawing on the enhanced resumes noted in “Part 1: Less is More is Now More is More” and the pre-recorded interviews discussed in “Part 2: Agentic Interviewing.” They may also pull from additional sources. For software developers, for example, they could look through a candidate’s public git commits (the parts of open-source code the candidate directly wrote and that are publicly visible). For a marketer, AI agents might be able to see white papers she has published. An AI-driven job board can evaluate both the candidate and the company through all the public information.

But is that a good thing? A few days before the first article in this series was published, OpenAI announced its own job platform. This might even go a step further: imagine if OpenAI’s proposed job board could review all your conversations with ChatGPT and take them into account. Are you insightful? Logical? Creative? Dogged? ChatGPT will know your traits through your interactions. That is quite a double-edged sword. (The actual OpenAI announcement seems to be more about a job board for AI-competent workers, but let’s consider it more generally as an AI-powered job board.)

Many years ago, MIT, which uses alumni to interview undergraduate applicants, set a policy that interviewers may not look up their interviewees on social media. This is for three reasons. First, what teenager hasn’t said or done something stupid at some point? MIT didn’t want such quotidian behavior to bias the interviewers. Second, not everyone uses social media to the same degree, and applicants shouldn’t benefit from, or be penalized for, their use or lack of it. Finally, who we are on social media isn’t always who we are in real life.

More generally, the way you carry yourself around your parents, your co-workers, your friends from high school, the other parents at your kids’ school, your industry peers, etc. can be very different. For some, pulling in context from ChatGPT would be akin to pulling in context from a personal diary. Likewise, social media posts and other online activities aren’t always a clear picture of who someone is. In my hundreds of media and podcast appearances, I present myself very formally, since that is consistent with the brand that goes with that part of my work. The same is generally true of my articles and LinkedIn posts (aside from occasional jokes like the music references in the second article in this series). With my co-workers, however, I make lots of jokes (I even do a little standup comedy, but that’s not online at all, so you’d never know). Pulling in just the public data gives a biased view. Or consider someone who is a strong advocate for a social cause like ending human trafficking. She might make passionate, emotional pitches online, and the words and style she uses for that cause are very different from how she presents herself at work as a hard-nosed, just-the-facts accountant.

I am very much for more information, but we do need to be careful about which information. These new job boards should only use what the candidate explicitly gives them, not whatever else they can seek out without the candidate’s consent. It might be slightly different on the company’s side, since a company doesn’t have a “personal life” separate from its corporate persona; pulling in outside information, such as news reports about the company, seems less problematic. Generally, though, wherever the line is drawn, we will have more information about both sides; what’s important is to draw that line carefully and intentionally.

There’s also the more general risk of bias that AI inherits from its training data (see “Redlining in the Twenty-First Century: Everything Everywhere All at Once”). For this to work well, we need to address that risk. I only briefly referenced this in the prior two articles but want to go a little deeper here. That article gives examples of how bias gets baked into AI, which is fairly well known although often ignored. If it’s not addressed by those more knowledgeable about this than I am, we’re going to continue to have problems.

Let’s assume, for the moment, that we somehow get rid of explicit bias, such as the classic preference for white-sounding names over names that sound African American. Also assume equivalent removal of bias based on gender, sexual orientation, religion, country of origin, etc. Again, this is no easy feat, but let’s assume it happens. There’s still a question of “diversity.” In this case it’s diversity of thought, which is itself a proxy for, or perhaps a derivative of, diversity of background.

Resumes conform to a narrow standard. If you make your resume unusual in format, both human reviewers and ATS (applicant tracking system) software can get confused. That probably hasn’t been too limiting for most people, but now we’re going beyond a resume.

Radio and TV in the US gave rise to individual programs with national reach. The initial trend was to use the famed Mid-Atlantic accent, which was later supplanted by a “General American” accent. Cecil Adams of the Washington City Paper addresses this in the article “Why Do Newscasters All Talk the Same?” In short, there was convergence toward the mean, or rather, toward expectation. If companies outsource their candidate-seeking AI agents to just a handful of third-party providers, we might find a new type of bias. Maybe it favors extroverts over introverts, or left-brained people over right-brained people. The same bias could apply to all jobs, or it could differ for particular roles. It may not be significant at first. But just as Adams noted that newer news anchors fit in by sounding like the veteran anchors, reinforcing the accent standard, so, too, might we see candidates converge as they try to fit the agents’ bias, even if small, creating a feedback loop that reinforces the bias. Again, bias here isn’t about traditional classes like gender or race, but about how people think and their personality types. This, too, is a risk in and of itself, and it likely works as a backdoor proxy for the other types of bias.

It should be noted that the comments above, and this series generally, are primarily about white-collar jobs. For factory workers, truck drivers, and other traditional blue-collar jobs, this matters much less. For lower-end jobs, it still comes down primarily to keywords, taking the term “keywords” broadly to mean such things as job responsibilities, experience, location, and pay. For more advanced blue-collar jobs, such as high-tech manufacturing, AI could do some initial assessments.

Assessment is one area where AI can significantly improve the process. Years ago, Sun Microsystems (now owned by Oracle) created the Java Certified Architect exam. Software engineers flocked to it to demonstrate that they could be software architects. Because of the constraints of online testing, the exam was limited to multiple-choice questions. As such, it could only really assess whether you knew which tool performed which function, its strengths and limitations, and how to configure it. All of these are important for a software architect to know, but the exam was missing one key assessment: how well someone could actually architect a system. Such a question requires a complex answer that could not be evaluated by software. Consider a building architect you might hire: you certainly want her to know what type of foundation is needed for a twenty-story building, and that can be assessed through multiple-choice questions. But you also want to know whether her building is well designed and matches your aesthetic style; that’s not something multiple choice can uncover. Or consider that software twenty years ago could test whether you knew which character in Hamlet killed the king (a multiple-choice question), but it couldn’t easily judge your interpretation of the play’s themes (which required parsing an essay answer, not easily done without LLMs).

LLMs can now start to do just that. As noted in the prior articles, they can assess less tangible qualities like leadership and communication style. They can also evaluate topics like system design. I don’t think they are there yet on these skills beyond a basic level, but they will likely advance. And again, most companies themselves are terrible at this, so AI can raise the standard across the industry and help companies hire for what they need instead of what is easy to measure. (See “The Streetlamp Effect in Hiring.”)

I don’t think AI will replace job boards, but job boards need to evolve quickly. Instead of presenting 100 director of finance jobs to 10,000 candidates, all of whom are looking for such a job, an AI-driven job board will create a recommended shortlist of candidates for each job (or of jobs for each candidate), making the market more efficient. Today job alerts are based on little more than keywords; in the future they will be based on many more dimensions, making them much more useful. Like any tool, its capability will vary, being better for some roles than others. This will reduce some of the noise noted in the first article in this series.

For example, instead of simply finding “director of finance” roles, a candidate can create an agent that looks for director of finance roles at a Series B or C startup of 100-500 people that allows working from home one or two days a week, is in the health space, has a culture of community engagement, and has a manager who leans toward a visionary style. In theory, attributes like the number of days working from home, company size, and funding stage could all be dropdown fields handled by a classic boolean search. In reality, no one does this. The other attributes can only be evaluated by an LLM parsing significant text.
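The two-tier search described above might be sketched roughly as follows: cheap structured fields go through a boolean filter, and only the survivors are ranked on the fuzzy preferences. This is a minimal illustration under stated assumptions, not any real job board’s implementation. `JobPosting`, `AgentQuery`, the `SoftScorer` signature, and `keyword_stub_scorer` are all hypothetical, and the stub merely stands in for an LLM judging free text.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class JobPosting:
    title: str
    company_size: int           # headcount
    funding_stage: str          # e.g. "Series B"
    remote_days_per_week: int
    industry: str
    description: str            # free text: culture, manager style, etc.


@dataclass
class AgentQuery:
    title: str
    min_size: int
    max_size: int
    stages: set[str]
    remote_days: set[int]
    industry: str
    # Fuzzy preferences no dropdown can capture; scored from free text.
    soft_preferences: list[str] = field(default_factory=list)


# Hypothetical stand-in for an LLM call: (job text, preference) -> score in [0, 1].
SoftScorer = Callable[[str, str], float]


def passes_hard_filters(job: JobPosting, q: AgentQuery) -> bool:
    """The part a classic boolean search could already do."""
    return (
        job.title.casefold() == q.title.casefold()
        and q.min_size <= job.company_size <= q.max_size
        and job.funding_stage in q.stages
        and job.remote_days_per_week in q.remote_days
        and job.industry == q.industry
    )


def shortlist(jobs: list[JobPosting], q: AgentQuery, score: SoftScorer,
              top_n: int = 5) -> list[JobPosting]:
    """Filter on structured fields first, then rank survivors by the average
    soft-preference score, so the expensive scorer runs on fewer postings."""
    def soft_score(job: JobPosting) -> float:
        if not q.soft_preferences:
            return 0.0
        total = sum(score(job.description, p) for p in q.soft_preferences)
        return total / len(q.soft_preferences)

    survivors = [j for j in jobs if passes_hard_filters(j, q)]
    return sorted(survivors, key=soft_score, reverse=True)[:top_n]


def keyword_stub_scorer(text: str, preference: str) -> float:
    """Placeholder only: fraction of the preference's words found in the text.
    A real agent would ask an LLM to judge the description instead."""
    words = preference.casefold().split()
    if not words:
        return 0.0
    return sum(w in text.casefold() for w in words) / len(words)
```

The ordering is the design point: the boolean filter is what today’s job boards could already offer, while the soft scoring is the part that, as noted above, only an LLM reading significant text can do well.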

In a similar vein, AI agents can also address one of my biggest pet peeves: compensation expectations. Most companies ask for a “salary expectation,” even though many people trade off salary against other compensation components like bonus and equity, as well as benefits. There’s also the disadvantage of having to disclose first. Using simple contractual protocols, the candidate’s agent can reveal its preferences if and only if the company’s agent agrees to reveal its preferences as well. Since both agents can be built to hold a single, fixed set of values that doesn’t change depending on who goes first, we can create information parity. (In theory, this could also be done today, but for many reasons it is not.)
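No specific protocol is prescribed above, so as one illustration, here is a minimal sketch using a commit-reveal scheme, a standard cryptographic technique swapped in for the purpose. Each agent first submits a SHA-256 hash of its numbers plus a random nonce; only after both commitments are in may either side reveal, and a reveal is accepted only if it matches the earlier hash. That enforces the “one fixed set of values regardless of who goes first” property. `CompPreference`, `commit`, and `MutualRevealBroker` are hypothetical names, and a real system would also need authentication and a trusted or verifiable broker.

```python
from __future__ import annotations

import hashlib
import json
import secrets
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class CompPreference:
    """Compensation figure broken into components (annual, same currency)."""
    base: int
    bonus: int
    equity: int

    def total(self) -> int:
        return self.base + self.bonus + self.equity


def _digest(pref: CompPreference, nonce: str) -> str:
    payload = json.dumps(asdict(pref), sort_keys=True) + nonce
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def commit(pref: CompPreference) -> tuple[str, str]:
    """Return (commitment, nonce). The hash hides the numbers; the random
    nonce keeps the other side from brute-forcing likely salary values."""
    nonce = secrets.token_hex(16)
    return _digest(pref, nonce), nonce


class MutualRevealBroker:
    """Releases neither side's numbers until both have committed and both
    reveals match their commitments, so nobody can adjust after peeking."""

    PARTIES = ("candidate", "company")

    def __init__(self) -> None:
        self._commitments: dict[str, str] = {}
        self._reveals: dict[str, CompPreference] = {}

    def submit_commitment(self, party: str, commitment: str) -> None:
        if party not in self.PARTIES:
            raise ValueError(f"unknown party: {party}")
        if party in self._commitments:
            raise ValueError(f"{party} has already committed")
        self._commitments[party] = commitment

    def submit_reveal(self, party: str, pref: CompPreference, nonce: str) -> None:
        if len(self._commitments) < len(self.PARTIES):
            raise RuntimeError("both parties must commit before anyone reveals")
        if _digest(pref, nonce) != self._commitments[party]:
            raise ValueError(f"{party}'s reveal does not match its commitment")
        self._reveals[party] = pref

    def result(self) -> dict[str, CompPreference] | None:
        """Both preferences, or None until both valid reveals have arrived."""
        if len(self._reveals) < len(self.PARTIES):
            return None
        return dict(self._reveals)
```

In use, the candidate’s agent would commit to a floor and the company’s agent to a ceiling; once `result()` returns both, a zone of agreement exists if the candidate’s total is at or below the company’s. Comparing totals rather than base salary alone reflects the trade-offs between salary, bonus, and equity mentioned above.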

What does this mean for recruiters? Recruiters spend significant time searching for candidates, doing candidate outreach, and then phone screening applicants. Much of that can be automated. The bottom fifty to seventy-five percent of recruiters will likely disappear. These are the spammers who just match keywords. (Despite having been a CTO for nearly two decades, I still get job spam emails and phone calls from recruiters for junior roles because I matched some technology keywords. If they even looked at my resume for a second, they wouldn’t have wasted their time or mine.)

The very top recruiters, especially those focused on senior IC (individual contributor) roles and on leadership and management roles, will continue to provide value. These recruiters can understand the nuances of a company’s culture and needs, and a candidate’s abilities beyond the keywords. They can see that a candidate who looks like a square peg at first is actually round, and know how to convince the company to take a look. Likewise, they can entice candidates into roles that may not look right at first.

Job descriptions are often poorly written. As such they don’t attract the right candidates and don’t help the company assess the right skills. There’s an old saying in software: garbage in, garbage out. No matter how powerful your software, if you have bad data, you get bad results. We have decades of bad job descriptions, and this is what AI will train on. Until that gets fixed, we’ll need recruiters to fill in the gaps.

Traditionally, recruiting firms have had a competitive advantage because of their relationships with clients and/or their candidate pools. I think a new point of differentiation will come from firms providing the type of assessments mentioned above, and from those similar to the early AI-supported recruiting company mentioned in “Part 2: Agentic Interviewing.” Bigger firms, like the SHREK executive search firms, will have an early advantage in developing these tools given their size and resources. Small firms will catch up as the tools become democratized or as they band together to invest in such tooling. (But again, if these tools become centralized, similar to how many landlords all use RealPage to suggest price increases, creating a de facto anticompetitive practice, we’ll see the convergence mentioned earlier.)

LLMs can unlock one other possibility: a hiring feedback loop. In marketing, companies can tie customers back to their source, such as an online ad campaign, conference event, webinar, or other marketing program. By looking not just at the leads a channel produces but at the actual customers, and even each customer’s lifetime value, the company can know which channel is most cost-effective. I’ve noted that hiring is about sales and marketing and should be treated as such (see “HR Trains Your Sales & Marketing, but Do Your Sales & Marketing Train HR?”).

Unfortunately, most companies don’t hire at a large enough scale to do this. And in hiring, it’s less about the source (i.e., the specific job board) than about other top-of-funnel attributes (meaning the attributes of the job applicants themselves).
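The marketing-style feedback loop might be sketched as below: join each applicant’s top-of-funnel attributes to whether they were hired and how they later performed, then compare groups, much as marketing compares channels by customer lifetime value. This is an assumption-laden illustration. `ApplicantRecord`, `outcomes_by_attribute`, and the `strong_performer` flag (a stand-in for a later HR evaluation) are hypothetical, and at the small hiring volumes noted above, the per-group rates would be statistically noisy.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ApplicantRecord:
    attributes: dict[str, str]  # top-of-funnel data, e.g. {"source": "board_a"}
    hired: bool
    strong_performer: bool      # from a later HR evaluation; ignored if not hired


def outcomes_by_attribute(records: list[ApplicantRecord],
                          attribute: str) -> dict[str, dict[str, float]]:
    """Group applicants by one attribute and report, per group, the hire rate
    and the share of hires later rated strong (a rough analogue of customer
    lifetime value in marketing attribution)."""
    counts = defaultdict(lambda: {"applicants": 0, "hires": 0, "strong": 0})
    for r in records:
        c = counts[r.attributes.get(attribute, "unknown")]
        c["applicants"] += 1
        c["hires"] += int(r.hired)
        c["strong"] += int(r.hired and r.strong_performer)
    return {
        group: {
            "applicants": c["applicants"],
            "hire_rate": c["hires"] / c["applicants"],
            "strong_per_hire": c["strong"] / c["hires"] if c["hires"] else 0.0,
        }
        for group, c in counts.items()
    }
```

Grouping by `"source"` gives the channel view familiar from marketing; grouping by an applicant attribute, such as a hypothetical `"prior_industry"` field, gives the top-of-funnel view that likely matters more in hiring.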

There’s a scene in the TV show “Suits” in which a lawyer named Katrina needs to evaluate the junior lawyers to determine which are the least efficient and should be let go. She creates a list of parameters (presumably things like billable hours and cases won) and produces a stack-ranked list. One character, Brian, is low on the list and set for termination. Donna, the intuitive character on the show, points out to Katrina that her list doesn’t account for everything Brian does. She gives examples such as loyalty and dedication, which are hard to measure quantitatively but highly valued at the firm. He also billed fewer hours because he spent more time helping his peers on their cases, making them better (and improving their metrics at the cost of his own). By looking under the streetlamp and measuring what was easy to measure, Katrina missed what may have been more valuable to the firm and potentially selected the wrong people. (See “The Streetlamp Effect in Hiring.”)

What makes someone valuable to a firm isn’t always easy to measure. Qualities that help people be a cultural fit and be successful within the system, norms, and customs of a company are fuzzy at best. How do you measure attributes like initiative, teamwork, or communication? Most people don’t even think about it, let alone try to measure or hire for it. LLMs may help to change that.

Many HR assessments of people are qualitative, which makes it hard to look through thousands of reviews and uncover trends. In an ideal system, companies would understand what traits work best for them; at larger companies it may even vary by department. If a company knew what worked, it could look for those traits in candidates. But again, this is very difficult, so most companies don’t even try (actually, most companies haven’t even thought to try). LLMs can parse reams of HR assessments and other data, find the desirable qualities, and then help craft the job description and evaluation metrics. They can go further, seeking those traits as AI agents search for candidates and/or signaling them in job posts so that the right candidates are drawn to the roles.

The concept can be taken further. No hired candidate is absolutely perfect. When we hire someone, we often expect them to be strong in some areas, and weak in others. The enhanced information in the process outlined in this series can help to assess that. Then, the onboarding process can be further customized to help the new employee make the most of her strengths and shore up her weaknesses, reducing hiring risk and setting her up for success within the organization.

(Obviously, the concepts here can also be applied more generally to performance reviews and other types of assessments. Through AI analysis of emails and meetings, companies can gain more insight into their employees’ strengths and weaknesses, but this is beyond the scope of these articles.)

This isn’t something that will happen overnight. It requires conscious use of HR evaluations (which many smaller companies don’t do and many bigger companies do poorly). Then that data needs to be fed into the hiring process. In the past this was nearly impossible because of the time it would take to process the data. LLMs can process it quickly, finally making possible such a feedback system, one that runs from employee evaluations to candidate filtering.

Again, there’s a risk of bias. The virtuous cycle can become self-reinforcing to a fault. Homogeneity has its place. Just as a couple with different love languages can feel ignored due to miscommunication, so too can colleagues with different leadership or other work styles have problems; e.g., a non-political (as in office politics) person working in a very political office tends to do poorly. Sharing a love language, or an approach to work, makes things easier. However, companies benefit from diversity, not only of classic attributes like race and gender, but also of thought. Such systems need to be designed to find the right balance for a company, and if that’s done wrong, it will go wrong quickly and at scale.

Finally, such large-scale data across job boards can surface trends in the workplace. I’ve long argued that the educational system in the US and elsewhere, both K-12 and higher education, is misaligned with today’s job market. It does not teach the skills companies are seeking. The large-scale data from these next-generation job boards might help universities wake up to this shortcoming.

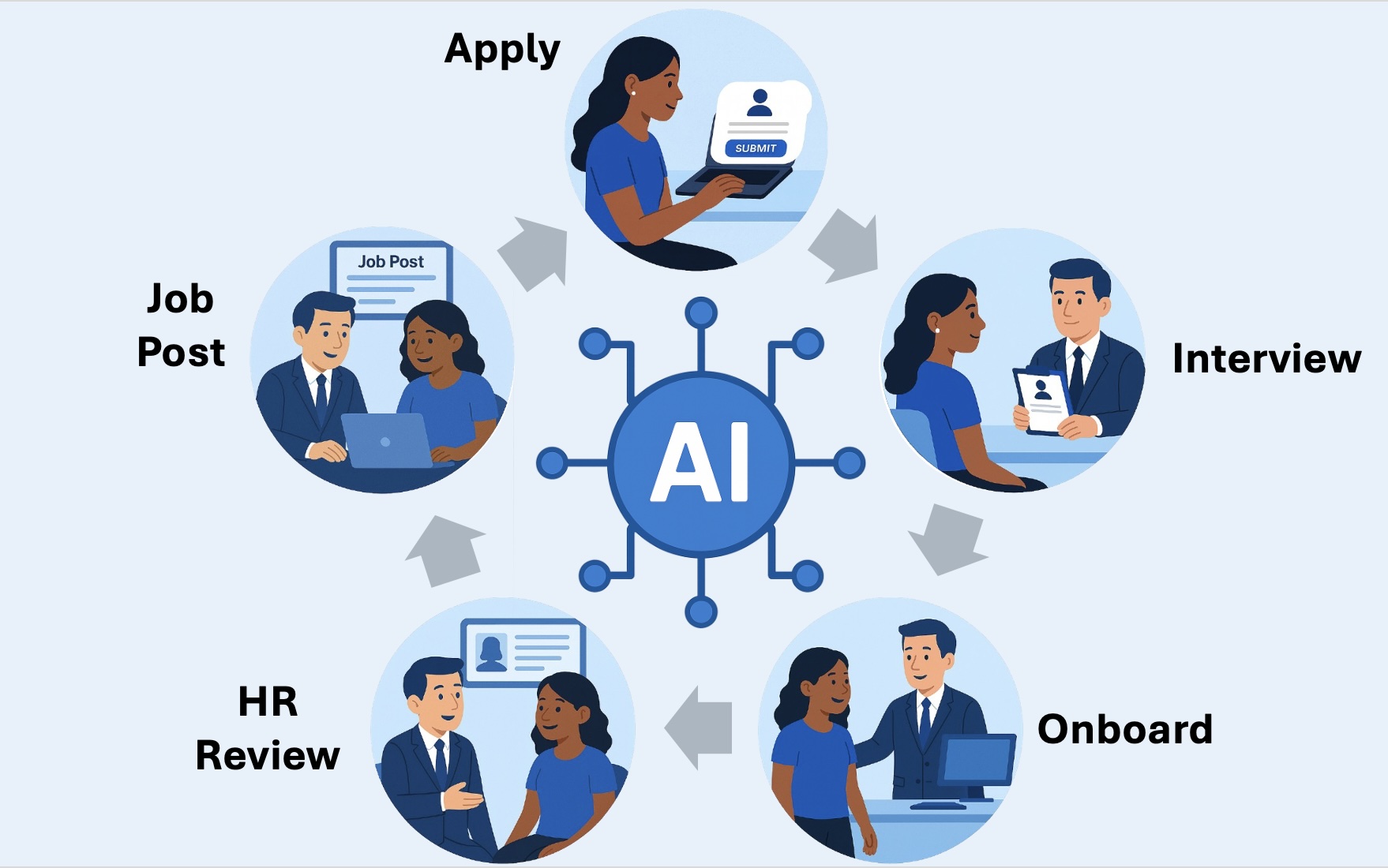

In this series we looked at a number of ways AI can improve the hiring process. There are first-order changes, like the ability to process more information. This will allow for longer resumes with more context, and pre-recorded interviews that can be processed quickly to add context and improve matching. Secondary effects include pushback from candidates, especially in strong labor markets where employees have the upper hand, driving companies to do the same by providing more detailed information about the company and role. Taken further, we can envision AI agents for both candidates and companies that go far beyond today’s boolean searches. If these agents are tied to HR evaluation functions, companies can truly create a virtuous feedback cycle across the employment lifecycle.

There’s the potential for new winners among job boards and recruiting firms. Recruiters will also significantly change how they operate. Today most of their time is spent on mechanical tasks like outreach; AI agents will take over the bulk of that work. Their roles will shift toward assessing higher-order issues and making the connections that keywords and AI agents can’t yet bridge; they’ll become trusted advisors to both parties, more like a classic matchmaker, something most recruiters aspire to be today.

Across it all, legacy bias in hiring will translate into bias in AI reviews of candidates (see “Redlining in the Twenty-First Century: Everything Everywhere All at Once”), so we must address that when using AI for hiring. Other types of groupthink bias are possible too, and must be guarded against as well.

There will likely be other secondary and tertiary effects, too, that will unfold as things evolve.

I believe the total impact of LLMs on hiring will be bigger than the impact of online job boards. Job boards simply lowered the cost of discovery and of applying; LLMs lower the cost of understanding. By taking advantage of the information-processing capabilities of LLMs, we can use more information to make better hiring decisions for all.
